From Fiction to Formal Standards

Early discussions about robot safety and trust were shaped by fictional concepts long before formal engineering standards existed. The most widely known example is Isaac Asimov's Three Laws of Robotics, which articulated intuitive ideas about harm prevention and human protection.

While influential in cultural and ethical discourse, these laws were never intended as operational, technical or regulatory frameworks. They lack mechanisms for risk assessment, verification, fault handling and accountability under real-world conditions.

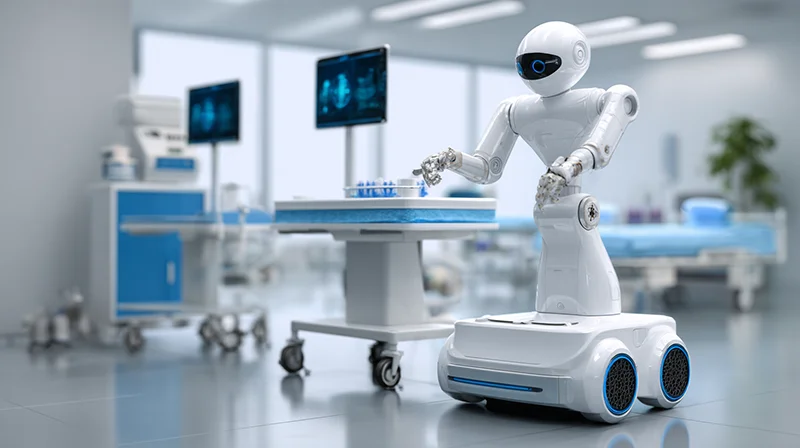

Modern service robotics replaces fictional constraints with formal standards, engineering controls and governance structures. Trust is not assumed because rules exist; it is established through defined terminology, safety requirements, system reliability and auditable deployment practices.

This shift from narrative principles to standards-based frameworks marks the transition from conceptual ethics to responsible, scalable deployment.

Fictional narratives such as Asimov's laws remain relevant for ethical reflection, but they are not referenced in contemporary standards, certification processes or deployment assessments.